👋 Welcome to another edition of the Lay of the Land. Each month, I (why?) pick a technology trend and undertake outside-in-market research.

This is article 2/3 of Topic 2: AI inference. Today, we’ll dig deeper into the foundation of the inference value chain - semiconductors that do the math, and the top players fulfilling the seemingly infinite demand for compute.

I hope you find this useful enough to follow along!

As always, if you missed Article 1, I recommend checking it out first.

That was a longer gap between articles than planned :(

But hey, a business school student gotta spring break during spring break. Chi > Seoul > Kyoto > Tokyo > SF > Chi - the vibes were immaculate.

Alright, let’s start with a quick recap: AI inference is turning into the next big utility. This new power grid monetizes predictions on demand using models hosted on specialized silicon, nested in massive warehouses, and accessed with smart middleware – phew.

The demand for these systems is skyrocketing with the proliferation of:

LLMs / NLP

Image recognition and manipulation

Personalization and recommendation engines

Predictive analytics and forecasting

across businesses, governments, and individuals. Furthermore, falling production costs and, consequently, the Jevons paradox are multiplying demand. The application layer can either set up its own infrastructure to support its prediction needs or tap into the inference power grid on demand. Today, the latter makes more economic sense.
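To make the Jevons paradox point concrete, here is a minimal sketch assuming a constant-elasticity demand curve. The elasticity values and price points are hypothetical and chosen only for illustration; none of this is measured inference demand.

```python
def demand(price: float, elasticity: float, scale: float = 1.0) -> float:
    """Constant-elasticity demand: quantity = scale * price ** -elasticity."""
    return scale * price ** (-elasticity)


def total_spend(price: float, elasticity: float, scale: float = 1.0) -> float:
    """Total spend is price times the quantity demanded at that price."""
    return price * demand(price, elasticity, scale)


if __name__ == "__main__":
    # Hypothetical: the unit price of inference falls 10x (arbitrary units).
    for elasticity in (0.5, 1.5):
        before = total_spend(10.0, elasticity)
        after = total_spend(1.0, elasticity)
        trend = "rises" if after > before else "falls"
        print(f"elasticity={elasticity}: total spend {trend} after a 10x price drop")
```

When elasticity is above 1, cheaper inference grows total spend rather than shrinking it, which is the effect the paragraph above leans on.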

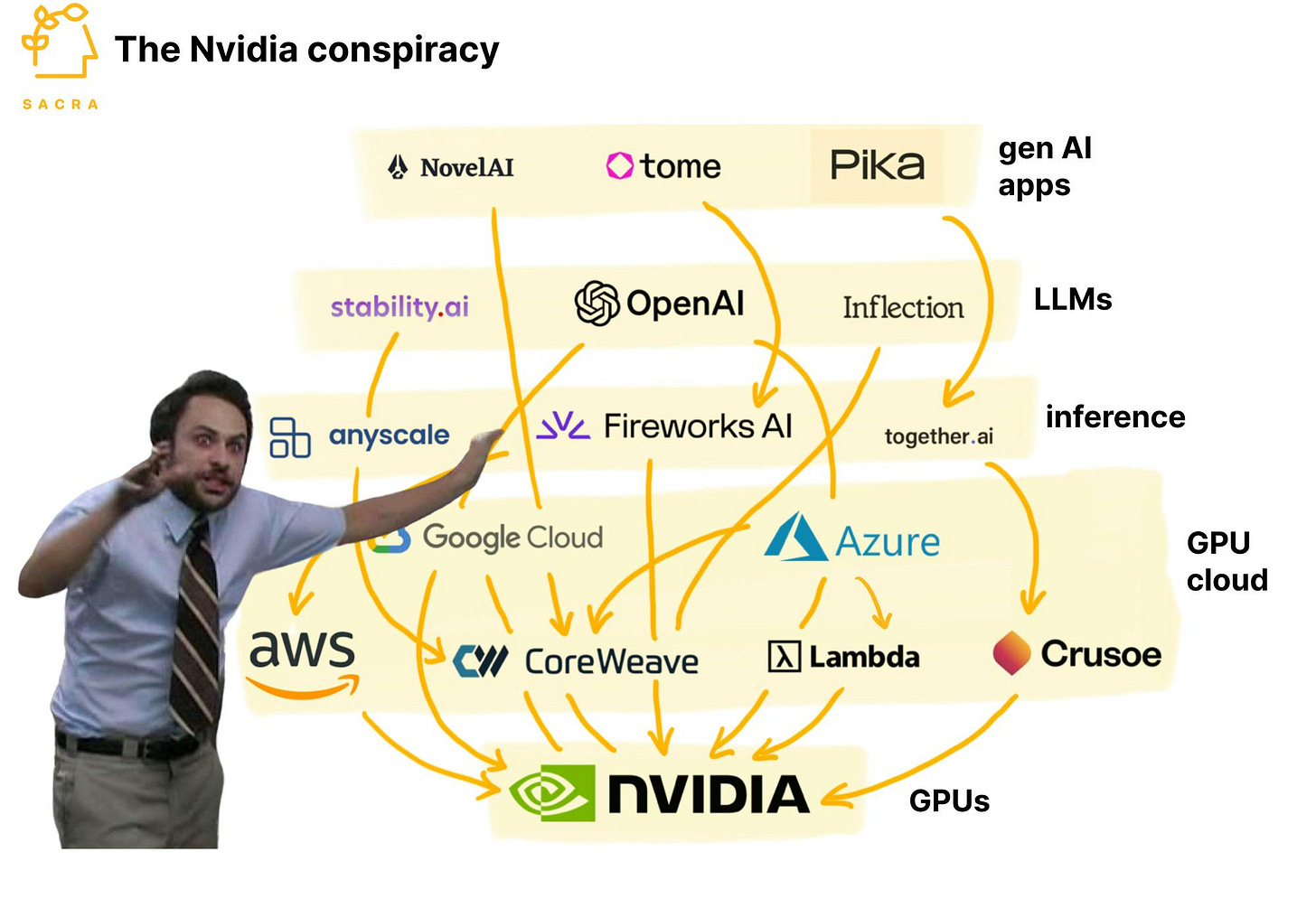

The value chain is a masterclass in specialization + vertical integration that’s sure to be a Business Strategy case real soon. No, seriously. It’s crazy out there. For some of these folks, the biggest customer is also the biggest competitor. Lead times run on the order of years because of supply chain constraints, yet on the order of days for the same thing if you have better access. Middlemen are weaponized to reduce business risk, and, until recently, everyone paid NVIDIA a cut.

Today’s article is all about semiconductors, and #3 will talk about the two key distribution plays (GPU clouds and inference engines) that gatekeep access to the silicon. In the process, we will map this ecosystem – name some names, pit them against each other, pick winners, and do other fun competitive landscape stuff.

Semiconductors for AI inference

We discussed how companies create systems, packaging GPUs, CPUs, Memory, and Networking components to power inference computing. These companies:

Set product strategy, evaluate trade-offs, and design the chip/accelerator

Test and undertake a thorough ‘Verification’ process

Outsource manufacturing to fabs or fabricate themselves (Intel)

Assemble and share reference designs with partners (OEM) for mass production

Release/maintain middleware for developers

They make money - a lot of it (70%+ margins) - selling these servers to 1) Direct buyers - AIBs (add-in board partners), distributors, OEMs, and system integrators, or 2) Indirect buyers - secondary sales from system integrators and distributors. They also make money from licensing middleware and from installation/maintenance contracts. Finally, some of these players vertically integrate and double as GPU clouds and inference engines of their own.

Their costs are mainly 1) Chip BOM - wafer fabrication; assembly, testing, and packaging; board and device costs; manufacturing support costs, including labor and overhead associated with such purchases; final test yield fallout (wastage is expensive); inventory and warranty provisions; memory and component costs; tariffs; amortized goodwill IP; and shipping costs, 2) Sales & Marketing (~3%), and 3) R&D (~10%). Easy to sell. Critical to stay ahead.

The Leaders - GPU Gang

are organizations that were already well established, selling similar hardware for another mature use case – graphics rendering for gaming and media. These generalized parallel computing units were tweaked to be aggregated and used in datacentres, and were made accessible to a large developer ecosystem through programming frameworks.

NVIDIA (80-90%):

Most mature and scaled inference player in the market. Anchored by the DGX H100 (70%), A100 (10-15%), and L4 for energy-efficient inference, it covers cloud-scale LLM inference, real-time vision, and edge workloads. With the introduction of Blackwell (B100) in 2024, NVIDIA claims 2x H100 level performance and better energy efficiency. The Blackwell architecture is also expected to be better suited for high-throughput inference. NVIDIA’s differentiation and strength lie in its end-to-end stack: CUDA, TensorRT, Triton Inference Server, and partnerships with all major CSPs (AWS, Azure, GCP, CoreWeave).

“(We are) a full-stack computing infrastructure company with data-centre-scale offerings that are reshaping industry. Our full-stack includes the foundational CUDA programming model that runs on all NVIDIA GPUs, as well as hundreds of domain-specific software libraries, software development kits, or SDKs, and Application Programming Interfaces, or APIs” – NVIDIA, 10K

In Q1 FY25, data center revenue hit $22.6B, up 427% YoY. Inference accounts for ~40–50% of GPU usage, translating to tens of billions in annual revenue. Margins remain above 70% for data center GPUs.
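The arithmetic behind these figures can be sketched as follows. This is a rough illustration only: it assumes the quarterly figure holds flat for four quarters and that share of GPU usage maps one-to-one onto share of revenue, neither of which the article or NVIDIA states.

```python
Q1_FY25_DATA_CENTER_REVENUE_B = 22.6  # $B, from the article
YOY_GROWTH = 4.27                     # 427% year-over-year growth


def prior_year_revenue(current: float, growth: float) -> float:
    """Back out last year's figure from the current figure and growth rate."""
    return current / (1.0 + growth)


def annualized_inference_revenue(quarterly: float, inference_share: float) -> float:
    """Assumption: flat run rate for 4 quarters, usage share equals revenue share."""
    return quarterly * 4 * inference_share


if __name__ == "__main__":
    prior = prior_year_revenue(Q1_FY25_DATA_CENTER_REVENUE_B, YOY_GROWTH)
    low = annualized_inference_revenue(Q1_FY25_DATA_CENTER_REVENUE_B, 0.40)
    high = annualized_inference_revenue(Q1_FY25_DATA_CENTER_REVENUE_B, 0.50)
    print(f"Implied year-ago quarter: ${prior:.1f}B")
    print(f"Implied annual inference revenue: ${low:.1f}B to ${high:.1f}B")
```

Under those assumptions, the 40-50% usage share does land in "tens of billions" territory.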

Their customer base is broad and well diversified across Hyperscalers, AI labs, Enterprise AI companies, automotive and manufacturing industries, and academia & government, who acquire GPUs directly or lease GPU capacity from secondary sellers (more in the next article). NVIDIA is also vertically integrated via investments in GPU clouds like CoreWeave, and through their own inference cloud - NVIDIA DGX Cloud.

AMD (10-15%):

AMD and NVIDIA had a pretty close competition going for the gaming GPU market in the early 2010s, with the former positioning itself as the better bang-for-the-buck option. NVIDIA broke away with performance improvements and, later, investments in datacenter operations. AMD also failed to provide a CUDA alternative (ROCm is still maturing), further widening the gap. Now they are playing catch-up and are doing a respectable job.

AMD, with its MI300X GPUs, focuses on memory-intensive operations with larger memory and higher bandwidth. While this works for large batch sizes, long-context inference, and chain-of-thought reasoning, it would be a disadvantage for the more common latency-focused inference we use every day. Looking back on day 1 of MBAi marketing, I am glad that they are positioning themselves differently. NVIDIA’s Blackwell trumps the MI300X, but AMD should regain the lead with the MI350X.

Data Center segment revenue was a record $12.6 billion in ‘24, an increase of 94% compared to the prior year.

Intel and Huawei (1-3%) are other players.

The biggest limitation of using GPUs for Gen AI is the need to move data between memory and compute cores. The system architecture stores model weights in off-chip high-bandwidth memory (HBM) and moves them into on-chip SRAM, next to the cores, to make computations. This process is repeated many times in inference, resulting in heavy energy bills, low GPU utilization (~single digit % with lots of idle time), and bottlenecked throughputs.
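The data-movement bottleneck can be sketched with back-of-envelope arithmetic. This is a simplified model, not a benchmark: it assumes batch size 1, that every generated token streams all model weights from memory once, and roughly 2 FLOPs per parameter per token. The model size, bandwidth, and peak compute figures below are illustrative assumptions, not vendor-verified numbers.

```python
def memory_bound_tokens_per_s(params: float, bytes_per_param: float,
                              bandwidth_bytes_per_s: float) -> float:
    """Upper bound on decode speed if every token must stream all weights once."""
    return bandwidth_bytes_per_s / (params * bytes_per_param)


def compute_bound_tokens_per_s(params: float, peak_flops_per_s: float) -> float:
    """Upper bound if only arithmetic mattered (~2 FLOPs per parameter per token)."""
    return peak_flops_per_s / (2.0 * params)


# Illustrative assumptions only.
PARAMS = 7e9              # hypothetical 7B-parameter model
BYTES_PER_PARAM = 2.0     # 16-bit weights
HBM_BANDWIDTH = 3.35e12   # bytes/s, assumed memory bandwidth
PEAK_FLOPS = 1e15         # FLOP/s, a round hypothetical peak

mem_limit = memory_bound_tokens_per_s(PARAMS, BYTES_PER_PARAM, HBM_BANDWIDTH)
compute_limit = compute_bound_tokens_per_s(PARAMS, PEAK_FLOPS)
utilization = mem_limit / compute_limit  # fraction of peak compute actually usable

if __name__ == "__main__":
    print(f"memory-bound limit:  {mem_limit:,.0f} tokens/s")
    print(f"compute-bound limit: {compute_limit:,.0f} tokens/s")
    print(f"implied compute utilization at batch size 1: {utilization:.2%}")
```

Under these assumptions the cores spend most of their time waiting on memory. Batching raises utilization because one read of the weights serves many requests at once, which is why the real-world number depends heavily on workload.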

Opportunity 👀

The Challengers

“With each new compute paradigm, technologists first attempted to adapt existing compute architectures to these workloads. But in each case, a new purpose-built architecture was ultimately needed to unlock the potential of the new paradigm.” – Cerebras S-1

Cerebras:

Makes really big chips. They pack 4 trillion transistors (~50x H100) and 900,000 cores (~52x H100) into one full wafer of silicon. These chips are 57x bigger and 10x faster, and they reduce the computational power needed to move data across memory, as well as the material costs of memory units. Cerebras offers its chips for on-prem deployment or through the Cerebras cloud. They package these chips (Cerebras Systems, CSoft, Inference serving stack) and partner with their biggest customer, G42 (87% rev concentration), to go to market as a GPU cloud.

The biggest caveat is that these chips are very expensive ($2–$3 million per unit). This is a particularly big issue for a manufacturing category that has yield issues - every defective wafer adds to the bill for the chips that make it. Another big challenge is supply chain limitations. Getting foundry capacity is a pain, as they can’t demand the same level of supplier loyalty that $3T NVIDIA can.
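Why die size and yield pull against each other can be sketched with the textbook Poisson yield model. This is an assumption on my part, not something Cerebras or its foundry publishes: the defect density is hypothetical, and the die areas are approximate public figures consistent with the "57x bigger" claim above. The naive model also ignores the spare cores and defect-routing that wafer-scale designs rely on, so treat the output as the problem being solved, not as the actual yield.

```python
import math


def poisson_yield(area_mm2: float, defects_per_cm2: float) -> float:
    """Probability that a die of the given area has zero defects (Poisson model)."""
    area_cm2 = area_mm2 / 100.0
    return math.exp(-area_cm2 * defects_per_cm2)


# Approximate areas (assumptions) and a hypothetical defect density.
GPU_DIE_MM2 = 814.0        # roughly a large reticle-limited GPU die
WAFER_SCALE_MM2 = 46225.0  # roughly a wafer-scale chip
DEFECT_DENSITY = 0.1       # defects per cm^2, hypothetical

if __name__ == "__main__":
    print(f"area ratio: {WAFER_SCALE_MM2 / GPU_DIE_MM2:.0f}x")
    print(f"naive GPU die yield:     {poisson_yield(GPU_DIE_MM2, DEFECT_DENSITY):.1%}")
    print(f"naive wafer-scale yield: {poisson_yield(WAFER_SCALE_MM2, DEFECT_DENSITY):.2e}")
```

The takeaway is directional: without built-in redundancy, a defect-free full wafer is essentially impossible, so the cost of tolerating defects has to be engineered into the chip and its price.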

During the six months ended Jun ‘24, they generated $136.4 million in revenue. However, they have a heavy revenue concentration in one customer (G42, 87%) and had a net loss of $66.6 million. They’ve raised $700M in funds and have filed an S-1.
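A quick sketch of what those numbers imply, using only the figures quoted above. The split assumes the 87% concentration applies to this same six-month revenue figure, which is my reading rather than something the article states outright.

```python
REVENUE_M = 136.4   # $M, six months ended Jun '24 (from the article)
G42_SHARE = 0.87    # revenue concentration in G42 (from the article)
NET_LOSS_M = 66.6   # $M net loss over the same period (from the article)


def split_revenue(revenue: float, top_customer_share: float) -> tuple[float, float]:
    """Return (revenue from the top customer, revenue from everyone else)."""
    top = revenue * top_customer_share
    return top, revenue - top


def net_margin(revenue: float, net_loss: float) -> float:
    """Net margin as a fraction of revenue; negative when the company loses money."""
    return -net_loss / revenue


if __name__ == "__main__":
    g42, others = split_revenue(REVENUE_M, G42_SHARE)
    print(f"G42: ${g42:.1f}M, everyone else: ${others:.1f}M")
    print(f"net margin: {net_margin(REVENUE_M, NET_LOSS_M):.1%}")
```

Seen this way, the non-G42 business is still small, which is the concentration risk in a nutshell.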

Groq:

Offers LPUs (Language Processing Units) with a focus on ultra-low latency using an architecture called Tensor Streaming, wherein they do deterministic compute with on-chip memory. Translation: they hold all the memory on chip and run a very predictable assembly line, as opposed to the trial and error of normal GPU operations. They have a cost advantage in using cheaper wafers and claim cheaper OpEx. Their product line includes the GroqChip processor (LPU), GroqCard accelerator, GroqNode server with 2x X86 CPUs and 8x LPUs, and GroqRack compute cluster made up of 8x GroqNodes plus a Groq Software Suite.

The problem with Groq is that they are doing what Cerebras is doing, but with regular-sized chips. This means they need a lot of chips. A standard Llama / Mixtral model would need 8 racks of 9 servers each, with 8 chips per server. That’s a total of 576 chips, whereas a single H100 can fit the model at low batch sizes, and two chips have enough memory to support large batch sizes. This makes upfront investment and admin overheads very high. They also face supply chain constraints.
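The chip count above is straightforward multiplication, sketched here along with the aggregate on-chip memory it buys. The 230 MB of SRAM per chip is a commonly cited figure that I am treating as an assumption; the article itself does not give it.

```python
def total_chips(racks: int, servers_per_rack: int, chips_per_server: int) -> int:
    """Chips in a deployment built from identical racks and servers."""
    return racks * servers_per_rack * chips_per_server


def aggregate_sram_gb(chips: int, sram_mb_per_chip: float) -> float:
    """Total on-chip memory across the deployment, in GB (1 GB = 1000 MB)."""
    return chips * sram_mb_per_chip / 1000.0


SRAM_MB_PER_CHIP = 230.0  # assumption, not stated in the article

if __name__ == "__main__":
    chips = total_chips(racks=8, servers_per_rack=9, chips_per_server=8)
    print(f"chips needed: {chips}")
    print(f"aggregate on-chip memory: {aggregate_sram_gb(chips, SRAM_MB_PER_CHIP):.1f} GB")
```

Holding everything in on-chip memory is what buys the latency, and it is also why the chip count balloons: each chip contributes only a small slice of the memory the model needs.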

Groq’s strategy to deal with these issues is to build out capacity and offer inference APIs of its own. They are building a mega datacenter in the Middle East with Saudi Aramco and are expected to be a key inference API engine for low-latency tasks using models they host/license. Groq is valued at ~$3B, has raised about $1B, and has revenue commitments (Aramco) north of $1.5B, alongside revenue from their inference engines and other chip sales.

SambaNova and d-Matrix

are other purpose-built accelerator companies competing in the same space. I would position them closer to Groq than Cerebras because of their focus on latency and because their chips aren’t massive.

The customers who said, “Isn’t it cheaper to do this ourselves?”

Not every hyperscaler is content paying NVIDIA a massive markup or competing for limited GPU supply. Some, with sufficient capital, infrastructure, and technical depth, have taken the in-house silicon route, building ASICs (Application-Specific Integrated Circuits): chips purpose-built for a narrow set of workloads, unlike the general-purpose GPUs discussed earlier.

While GPUs are designed for broad parallel processing and are great for training a wide range of models, ASICs are custom-designed for specific types of inference tasks, often delivering better efficiency, lower latency, and improved cost-performance, but with far less flexibility.

Google

The OG of AI ASICs. Google’s TPUs (Tensor Processing Units) were introduced in 2015 and have evolved through multiple generations, with TPU v5e recently released. These chips are optimized for Google's internal workloads (like Search, Ads, YouTube) and are also accessible via Google Cloud. TPUs are tightly integrated with TensorFlow and the broader Google AI stack, enabling high-efficiency inference at scale.

Amazon

AWS has developed two custom AI chips: Trainium (for model training) and Inferentia (for inference tasks). These are part of AWS’s effort to vertically integrate its infrastructure and reduce dependency on NVIDIA. Amazon claims Inferentia2 offers up to 4× better performance per watt than comparable GPUs. These chips are primarily used in AWS’s SageMaker and Bedrock offerings.

MSFT and META

are also building capabilities with custom ASIC Maia (with OpenAI) and by acquiring Furiosa (a South Korean chipmaker for $800M), respectively. The Meta acquisition of Furiosa, however, is in troubled waters with the chipmaker turning down Meta’s offer.

Huawei

Huawei has been pushing aggressively into custom AI silicon with its Ascend series of chips (especially Ascend 910 and 310). These ASICs are used in Huawei’s cloud infrastructure and increasingly power AI workloads across China, especially in response to export restrictions on high-end GPUs from Western companies.

ASICs are a natural fit for tech giants with scale, stable workloads, and full-stack control. They offer better cost-efficiency, power savings, and performance for specific inference tasks - but at the expense of flexibility. Unlike GPUs, ASICs are hardwired for certain architectures and can quickly become obsolete if models evolve. They're a high-reward, high-risk bet: great for steady, known workloads, but less ideal for anyone chasing the bleeding edge.

For that reason, Hyperscalers will still need GPUs and Accelerators for many of their general use cases and to act as inference resellers. But they will move some of their AI workloads to ASICs in order to de-risk supply irregularities and enable hyper-customization for certain use cases - an ASIC for Google Search, an ASIC for MSFT Copilot, etc.

SWOT analysis

Clearly there is space for each of the above players to contribute and maybe even co-exist. How about a comparative analysis - MBA style?

Industry risks

Finally, before wrapping up, here are some industry-wide risks that would affect all our players:

Supplier risks - TSMC and the HBM folks say they need a break.

Geopolitics - Tariffs, tariffs, tariffs. Also, strategic embargoes.

Market risks - what if the hype dies down and there is oversupply?

CapEx risks - Payback timelines, debt structures meet market risks

Technology risks

On-device - becomes the norm and eats up demand.

Photonics - a new/energy efficient paradigm.

Quantum - compute on steroids, changing everything.

Model architecture - transformers are replaced.

That’s all for today! In Part 3, we’ll move from the chips to the gates. Who actually controls access to this new compute power grid? We’ll dive into the distribution layer - the GPU clouds and inference engines that make silicon accessible to developers and enterprises alike.

If you liked the content and would like to support my efforts, here are some ways:

Subscribe! It’ll help me get new updates to you easily

Please spread the word - it’s always super encouraging when more folks engage with your project. Any S/O would be much appreciated.

Drop your constructive thoughts in the comments below - point out mistakes, suggest alternate angles, requests for future topics, or post other helpful resources