AI on the (Near) edge (1/3) - MicroDCs and Telco MECs

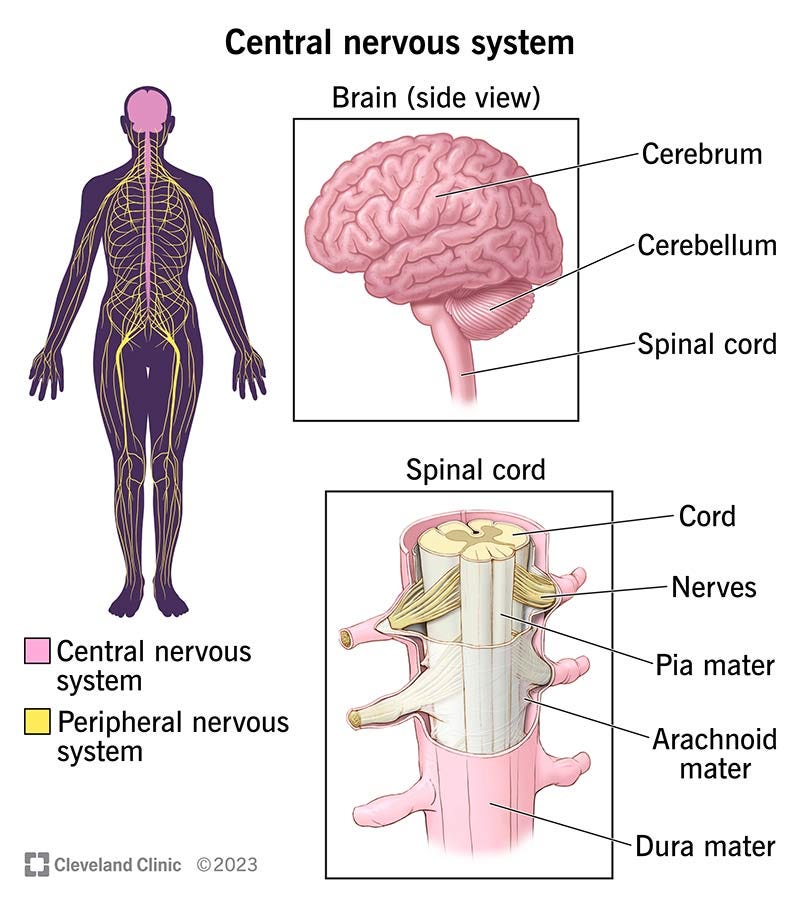

The spinal cord of the distributed AI nervous system

👋 Welcome to a new edition of the Lay of the Land. Each month, I (why?) pick a technology trend and undertake outside-in-market research for the opportunities they present.

This is article 1/3 of Topic 3: AI on the Edge. We’ll lay out the basics of edge computing, break down how compute workloads hop from devices to micro-DCs (data centers) and telco MECs, the business variables that decide the landing zone, and the suppliers jockeying for those GPU hours.

Hope you find this useful enough to follow along!

Also, if you missed Topics 1 and 2, (strong recommend, if you are new to language models and inference)

Your brain wades through GBs of sensory noise every second, yet almost none of it ever reaches you. A lightning-fast Type 1 layer - a tag team of the retina, mid-brain, and spinal reflex arcs - screens the input, discards the noise, and fires only high-risk or high-value signals up the chain. By filtering on the fly, it shields your cortex from overload and shaves precious milliseconds off every reaction.

Street-corner CCTV cameras borrow the same trick. An on-device chip scans the video feed, skips rustling leaves, locks onto a loiterer, and sends a ten-second clip to the cloud for heavyweight face matching. This selective hand-off is Edge Computing: compute data where it's born, with an option of escalating when needed. The payoff is lower delay, slimmer bandwidth, and tighter privacy, turning phones, lampposts, factory lines, and hospital rooms into ‘smart’ outposts. Add a compact neural net and you get Edge AI: a pocket translator, cameras that flag trespassers, turbines that predict failure, earbuds that transcribe whispers - all without begging a distant GPU for help. In the sections ahead, we’ll unpack the hardware, networks, and business models bringing this silicon nervous system to life.

What’s up at the edge?

The Edge includes computing resources and network hops along the path between data sources and cloud data centers. The key components of building an AI edge network are:

Computing hardware - CPUs/GPUs/NPUs/ASICs that infer

Real estate - your iPhone, Smart Fridge, or a MicroDC rack, which hosts (1)

Power and thermal - a battery or a mini DC plant that powers and protects (1)

Connectivity and network - Radio access network (5G) / WiFi / Fiber Optic cables connecting your phone, a microDC, and Azure USWest2.

Software-defined networking - the software brain that decides which data packet goes where and who runs what.

AI runtime & model optimisation - for loading up optimized (pruned/quantized, etc) models on (1) and running inference on them

Orchestration - batching requests, splitting loads between compute cores, and Kubernetes-ing the hardware.

Local storage, CDNs & data pipeline - to support data movement that happens in parallel.

Identity, policy, and billing

Support and operations

The power of compute required / available, cost of energy and real estate, latency/egress sensitivity, and the computing use case will determine the ideal edge architecture. Providers balance these trade-offs to build a distributed network that places compute in the cloud, Near it, or Far from it.

The Near Edge

Far edge packs compute not only into the smart device itself but also into the on-prem gateways or industrial routers sitting a few feet away, and Near edge, a short hop up the network in a telecom companies regional data center or a dedicated micro-datacenter that represents your locality.

An AI metro micro-DC (a few racks of GPUs/NPUs, ≈ 100-500 TOPS at 10-20 kW) delivers ≈ 5-20 ms round-trip latency: far more compute and energy than a phone, far less than a hyperscale region, trading power for proximity. While an AI MEC (Multi-access edge computing) is usually a telco-owned sled inside their RAN/central office (10-100 TOPS at 1-4 kW) that hits ≈ 1-10 ms thanks to direct radio hooks: higher muscle and draw than a handset, leaner than a microDC, optimised for ultra-low latency. Both of these compute centers were built out to provide services like media delivery (CDN caches), low-ping multiplayer games, filtering data at the feed, and securing access. MECs would additionally take care of local RAN software needs for the host station. Although a promising pitch, MECs never quite lived up to demand.

New life with AI? Here are some compelling reasons:

Low latency and UX lifts are important for real-time AI

Bandwidth as a bottleneck - Need for local pre-filtering for real-time voice AI use cases like translation and the io pendant?

AI for Random Access Networks (RAN) - Important network functions like secondary carrier prediction, antenna tilting, cell handover, link adaptation, and rogue drone detection are all use cases that AI can solve.

AI on devices is limited by battery and heating issues.

The architectures below, as listed by S. Barros in Solving AI Foundational Model Latency with Telco Infrastructure, are representative (not confirmed with real examples) of LLM inference architectures that could be serviced by the MEC / Regional DC/ Core cloud combo.

Also here are some MECs and MicroDCs ‘Near’ me:

AWS Wavelength Zone on Verizon 5G Edge (MEC), Chicago

AT&T Multi-access Edge node @ MxD (MEC) - Goose Island

EdgeConneX Chicago EDC

Equinix Metal “CH” metro (MicroDC / bare-metal as-a-service)

Next up, let’s explore the…

Skipping past some of the other inputs, the Near Edge is operated in strategically located facilities, via two main supply models:

Telco MECs x Cloud Servers (Hyperscalers / GPU clouds)

Telco + hyperscaler MEC roll-outs start with a commercial MoU that fixes the revenue split (AT&T keeps a share of every Azure edge instance; Verizon earns a cut of Vultr’s GPU-as-a-service hours) and defines service-level targets. Next, joint site & capacity planning selects Teleco-owned central-office spaces or colocation spaces within microDCs, with enough power, cooling, and dark fiber to the parent cloud region; Microsoft and AT&T size racks for hardware that powers Azure Operator Nexus (for RAN network functions as well as providing edge zones), while Verizon and Vultr reserve data-center pods inside Verizon’s AI-Connect footprint. During hardware procurement, the telco likely funds the basic rack (built by DC cos like Vapor, Equinix), but the hyperscaler (or GPU cloud) buys, packages, and provides the servers, most often keeping depreciation on its books.

Once the gear lands, teams complete network & control-plane integration: a private wave links the MEC to the parent Azure region; the hyperscaler extends its IAM and billing to charge an “edge premium”, while the telco exposes APIs. Finally, a joint launch & operations phase splits duties—maintenance, utilities, reliability, etc.

For developers, nothing changes: they toggle on edge zones, pay a latency premium (plausibly ~25% - variable based on location), and keep using the same Azure / Vultr CLI. They can use Azure Foundry Local to drop an ONNX-optimized model bundle onto these edge clusters and run inference on them. For end users, their applications are faster and more available.

The same service is provided by AWS Wavelength with partners like Verizon and Vodafone.

Micro DCs x Cloud Servers

This one also starts with a commercial MoU or long-term lease: the colo landlord (e.g., Equinix, Edgeconnex or Vapor IO) guarantees a block of powered floor space and dense cross-connects (powered by telcos and wholesale carriers like Zayo), while the hyperscaler commits to fill those racks for 5–10 years and pays a wholesale $/kW rate or enters a revenue-sharing JV.

During site & capacity planning, the partners pick a metro facility that can hit <20 ms RTT to users and deliver 10–20 kW per rack. In the hardware split, the colo supplies the shells, PDUs, and cooling, but the hyperscaler (or a GPU cloud such as CoreWeave) buys, integrates, and owns the NVIDIA servers and 400Gb switches, keeping depreciation on its books.

Similar to MECs, a private fiber wave is lit back to the parent region, and the cloud provider extends its IAM, billing, and monitoring, via products like AWS Local Zones, so the new metro appears as an extra zone inside the familiar console. Vapor IO’s Anthos and Outposts integrations show the same pattern, with Google or AWS plugging their control planes into the Kinetic Grid via API and automated telemetry. Developer and end-user experience remain the same.

Vapor also sells a managed Zero Gap AI service with Supermicro servers built on NVIDIA’s GH200 MGX platform that it co-owns and operates, exposing GPU minutes as an on-demand cloud.

CDN PoPs:

Akamai and Cloudflare are independent CDN providers with infrastructure (servers) hosted across the globe. These Point of Presence servers (PoPs) provide content delivery, DNS routing, and many other dev tooling services that act as a layer between you and the internet. These mini-servers, often colocated in DC facilities, also provided lightweight storage and edge computing capabilities - serverless functions/objects, etc. In the last few years, they have started retrofitting their top PoPs with GPUs and have also started building out Super PoP clusters.

Workers AI (Cloudflare) and the Akamai Connected Cloud are inference solutions built on this PoP network. Workers AI focuses on smaller models made available for cheap at low latencies, while Akamai claims to be able to support bigger models by virtue of their Super PoPs. Their big edge over MECs and MicroDCs is their ubiquity since they are present everywhere, and in the case of Workers.ai, the ease of serverless inference and low costs.

That’s all for today! In Part 2, we’ll move from the near to the far - all the way to the factory floor and the lamppost - to see how 15-watt NPUs and gateway routers keep the lights on when the cloud goes dark.

If you liked the content and would like to support my efforts, here are some ways:

Subscribe! It’ll help me get new updates to you easily

Please spread the word - it’s always super encouraging when more folks engage with your project. Any S/O would be much appreciated.

Drop your constructive thoughts in the comments below - point out mistakes, suggest alternate angles, request future topics, or post other helpful resources